About

Parquet is a read-optimized encoding format (write once, read many) for columnar tabular data.

Parquet is built from the ground up with complex nested data structures in mind. It implements the record shredding and assembly algorithm described by Google engineers in their paper "Dremel: Interactive Analysis of Web-Scale Datasets".

Parquet encodes data per column, which results in a high compression ratio and smaller files. Because a file is divided into row groups, its parts can also be loaded in parallel.

It's a native Hadoop format.

Format

High Level

- Block: An HDFS block (Hadoop). The file format is designed to work well on top of HDFS.

- File: An HDFS file.

- Row group: A logical horizontal partitioning of the data into rows.

- Column chunk: A chunk of the data for a particular column.

- Page: Column chunks are divided up into pages. A page is conceptually an indivisible unit (in terms of compression and encoding).

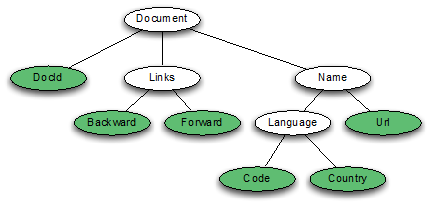
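The hierarchy above (file, row group, column chunk, page) can be sketched in plain Python. This is only an illustration of the layout, not a Parquet writer: the function name `to_row_groups` and its parameters are hypothetical, and it splits by value counts to keep the example small, whereas real Parquet writers size row groups and pages in bytes.

```python
from typing import Any


def to_row_groups(
    rows: list[dict[str, Any]],
    rows_per_group: int,
    values_per_page: int,
) -> list[dict[str, list[list[Any]]]]:
    """Split rows into row groups -> column chunks -> pages.

    Returns a list of row groups. Each row group maps a column name to its
    column chunk, and each column chunk is a list of pages (lists of values).
    """
    column_names = list(rows[0].keys()) if rows else []
    row_groups = []
    for start in range(0, len(rows), rows_per_group):
        group_rows = rows[start:start + rows_per_group]  # horizontal partition
        row_group = {}
        for name in column_names:
            # Column chunk: all values of one column within this row group.
            chunk = [row[name] for row in group_rows]
            # Pages: the column chunk cut into fixed-size pieces.
            row_group[name] = [
                chunk[i:i + values_per_page]
                for i in range(0, len(chunk), values_per_page)
            ]
        row_groups.append(row_group)
    return row_groups


rows = [{"id": i, "country": "NL" if i % 2 else "US"} for i in range(5)]
layout = to_row_groups(rows, rows_per_group=4, values_per_page=2)
print(layout[0]["id"])       # [[0, 1], [2, 3]]
print(layout[1]["country"])  # [['US']]
```

Storing each column chunk contiguously is what lets a reader fetch one column without touching the others.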

Nested data structures

To be continued: the format explained in detail.

| Nested Structure | Column Translation |
|---|---|
| docid | docid |
| links { backward, forward } | links.backward, links.forward |
| name { language { code, country }, url } | name.language.code, name.language.country, name.url |

Borrowed from the Google Dremel paper.

The model is minimalistic in that it represents nesting using groups of fields and repetition using repeated fields. There is no need for other complex types such as Maps, Lists, or Sets, because they can all be mapped to a combination of repeated fields and groups.
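As a rough illustration of how groups and repeated fields turn into columns, the sketch below collects the leaf values of a nested record under dotted column paths. It is a simplification, not the Dremel algorithm itself: the real record shredding also stores repetition and definition levels so that records can be reassembled, and those are omitted here. The function name `shred` and the sample record are illustrative assumptions.

```python
from collections import defaultdict
from typing import Any, Optional


def shred(
    record: dict[str, Any],
    columns: Optional[dict[str, list]] = None,
    prefix: str = "",
) -> dict[str, list]:
    """Collect leaf values per dotted column path (no repetition/definition levels)."""
    if columns is None:
        columns = defaultdict(list)
    for field, value in record.items():
        path = f"{prefix}{field}"
        if isinstance(value, dict):        # a group: recurse into its fields
            shred(value, columns, path + ".")
        elif isinstance(value, list):      # a repeated field
            for item in value:
                if isinstance(item, dict):  # repeated group
                    shred(item, columns, path + ".")
                else:                       # repeated primitive
                    columns[path].append(item)
        else:                              # a single primitive value
            columns[path].append(value)
    return columns


# Illustrative record using the field names from the table above.
record = {
    "docid": 10,
    "links": {"forward": [20, 40, 60]},
    "name": [
        {"language": [{"code": "en-us", "country": "us"}, {"code": "en"}],
         "url": "http://A"},
        {"url": "http://B"},
        {"language": [{"code": "en-gb", "country": "gb"}]},
    ],
}

columns = shred(record)
print(columns["name.language.code"])     # ['en-us', 'en', 'en-gb']
print(columns["name.language.country"])  # ['us', 'gb']
```

Note that after flattening, it is no longer possible to tell which `name` entry a given `country` belonged to. That missing information is exactly what the repetition and definition levels carry in the real format.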

Compact format

- Type-aware encodings

- Better compression

Parquet allows compression schemes to be specified on a per-column level.
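To make the idea of column-level encodings concrete, here is a minimal sketch of two techniques that suit low-cardinality columns: dictionary encoding followed by run-length encoding. The function names are hypothetical and the output is a plain Python structure. Parquet's actual on-disk encodings (for example its RLE/bit-packing hybrid) are more compact and are not reproduced here.

```python
def dictionary_encode(values: list) -> tuple[list, list[int]]:
    """Replace each value by its index in a dictionary of distinct values."""
    dictionary: list = []
    index_of: dict = {}
    indices: list[int] = []
    for value in values:
        if value not in index_of:
            index_of[value] = len(dictionary)
            dictionary.append(value)
        indices.append(index_of[value])
    return dictionary, indices


def run_length_encode(values: list) -> list[tuple]:
    """Collapse consecutive equal values into (value, run_length) pairs."""
    runs: list[list] = []
    for value in values:
        if runs and runs[-1][0] == value:
            runs[-1][1] += 1
        else:
            runs.append([value, 1])
    return [(value, count) for value, count in runs]


def run_length_decode(runs: list[tuple]) -> list:
    """Expand (value, run_length) pairs back into the original sequence."""
    return [value for value, count in runs for _ in range(count)]


country = ["US", "US", "US", "NL", "NL", "US"]
dictionary, indices = dictionary_encode(country)
runs = run_length_encode(indices)
print(dictionary)  # ['US', 'NL']
print(runs)        # [(0, 3), (1, 2), (0, 1)]

# Decoding reverses both steps.
decoded = [dictionary[i] for i in run_length_decode(runs)]
```

Because all values of a column share one type and often repeat, an encoding can be picked per column, and a general-purpose compression codec can then be applied on top of the encoded pages.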

Optimized I/O

- Projection push down (column pruning)

- Predicate push down (filters based on stats)
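The two optimizations above can be sketched with an in-memory stand-in for row groups. This is a simplified model under stated assumptions: each row group is a dict of column name to values, the min/max statistics are computed by the hypothetical helper `build_stats`, and the only supported filter is `column >= lower_bound`. A real reader takes the statistics from the file metadata instead.

```python
def build_stats(row_group: dict[str, list]) -> dict[str, tuple]:
    """Compute (min, max) per column, as a writer would store in metadata."""
    return {name: (min(values), max(values)) for name, values in row_group.items()}


def scan(row_groups, wanted_columns, filter_column, lower_bound):
    """Return rows where filter_column >= lower_bound, keeping only wanted_columns.

    Also returns how many row groups actually had to be read.
    """
    result = []
    groups_read = 0
    for row_group, stats in row_groups:
        _, max_value = stats[filter_column]
        if max_value < lower_bound:
            continue  # predicate push down: statistics rule out the whole group
        groups_read += 1
        # Projection push down: only touch the columns the query needs.
        needed = set(wanted_columns) | {filter_column}
        columns = {name: row_group[name] for name in needed}
        for i, value in enumerate(columns[filter_column]):
            if value >= lower_bound:
                result.append({name: columns[name][i] for name in wanted_columns})
    return result, groups_read


rg1 = {"id": [1, 2, 3], "country": ["US", "NL", "US"], "amount": [5, 7, 9]}
rg2 = {"id": [4, 5, 6], "country": ["FR", "US", "NL"], "amount": [50, 70, 20]}
row_groups = [(rg, build_stats(rg)) for rg in (rg1, rg2)]

matching_rows, groups_read = scan(row_groups, ["id"], "amount", 30)
print(matching_rows)  # [{'id': 4}, {'id': 5}]
print(groups_read)    # 1
```

The first row group is skipped without reading any values because its maximum `amount` is below the bound, and the `country` column is never read at all.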

Distribution

API

The parquet-format project contains the format specification and the Thrift definitions of the metadata that readers and writers need in order to read and create Parquet files correctly.
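As a small illustration of where that metadata lives, the sketch below locates the serialized file metadata inside a Parquet file's bytes. It relies on the file layout from the format specification: a file starts and ends with the 4-byte magic `PAR1`, and the 4 bytes before the trailing magic hold the metadata length as a little-endian integer. The function name `footer_metadata_range` is hypothetical, and decoding the Thrift structure itself would need a Thrift library, which is not shown.

```python
import struct

MAGIC = b"PAR1"


def footer_metadata_range(data: bytes) -> tuple[int, int]:
    """Return the (start, end) byte offsets of the serialized file metadata."""
    if len(data) < 12 or data[:4] != MAGIC or data[-4:] != MAGIC:
        raise ValueError("not a Parquet file: missing PAR1 magic bytes")
    # The 4 bytes before the trailing magic hold the metadata length.
    (length,) = struct.unpack("<I", data[-8:-4])
    end = len(data) - 8
    start = end - length
    if start < 4:
        raise ValueError("corrupt file: metadata length exceeds file size")
    return start, end


# Synthetic buffer that only mimics the layout; the payload is not real Thrift.
fake_metadata = b"\x00" * 10
data = MAGIC + b"column data" + fake_metadata + struct.pack("<I", len(fake_metadata)) + MAGIC

start, end = footer_metadata_range(data)
print(start, end)  # 15 25
```

Keeping the metadata at the end of the file lets a writer produce it in a single pass, after all row groups have been written.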

Data Type

Mapping of SQL types to Parquet types:

| SQL Type | Parquet Type | Description |
|---|---|---|
| BIGINT | INT64 | 8-byte signed integer |
| BOOLEAN | BOOLEAN | TRUE (1) or FALSE (0) |
| N/A | BYTE_ARRAY | Arbitrarily long byte array |
| FLOAT | FLOAT | 4-byte single precision floating point number |
| DOUBLE | DOUBLE | 8-byte double precision floating point number |
| INTEGER | INT32 | 4-byte signed integer |
| VARBINARY(12)* | INT96 | 12-byte signed integer |
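The table can be expressed as a simple lookup, for instance when generating a Parquet schema from SQL column definitions. This is only a sketch of the mapping listed above: the names `SQL_TO_PARQUET` and `parquet_type` are hypothetical, and `BYTE_ARRAY` is left out because the table gives no SQL type for it.

```python
# SQL type -> Parquet physical type, copied from the table above.
SQL_TO_PARQUET = {
    "BIGINT": "INT64",
    "BOOLEAN": "BOOLEAN",
    "FLOAT": "FLOAT",
    "DOUBLE": "DOUBLE",
    "INTEGER": "INT32",
    "VARBINARY(12)": "INT96",
}


def parquet_type(sql_type: str) -> str:
    """Look up the Parquet type for a SQL type name (case-insensitive)."""
    key = sql_type.strip().upper()
    if key not in SQL_TO_PARQUET:
        raise ValueError(f"no Parquet mapping listed for SQL type {sql_type!r}")
    return SQL_TO_PARQUET[key]


print(parquet_type("bigint"))   # INT64
print(parquet_type("Integer"))  # INT32
```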

Library / Tool

Platform

You can read and write Parquet with these platforms:

- Athena

- EMR

- Machine learning vendors such as Databricks or DataRobot

Tools

- Sqoop: see Sqoop import and export.

- https://github.com/hellonarrativ/spectrify - Python one-liners to export a Redshift table to S3 (CSV), convert the exported CSVs to Parquet files in parallel, and create the Spectrum table on your Redshift cluster.

Documentation / Reference

- Parquet Format explained: https://blog.twitter.com/2013/dremel-made-simple-with-parquet