Data Profiling Implementation in OWB

If you don't know anything about data profiling, you can have a look to the Data Profiling Page for a description.

Nothing is better than a video : OWB - Data Profiling Demo

Most organizations build a data warehouse to provide an integrated, reliable, and consistent “single version of the truth.” Data is usually sourced from a number of systems and has to be extracted, cleansed, and integrated before being made available for users to query.

The quality of the data loaded into the data warehouse is often variable, however, and for that reason, historically the process of profiling your source data has been a time-consuming, manual process that has required either lots of experience with SQL*Plus or the purchase of an expensive third-party tool.

With the release of Oracle Warehouse Builder 10g Release 2, however, the ability to profile your data is built into the tool and no knowledge of SQL*Plus is required. Furthermore, the data profiles that you build using Oracle Warehouse Builder can be used to generate automatic corrections to your data. In this article, you’ll learn all the nuances of this important new feature.

Data profiling is a prominent feature of Warehouse Builder 11g, allowing you to investigate data and detect anomalies. Then create business rules and generate a corrective ETL process based on these business rules.

Data Profiling can be defined as a process that automates the identification of problematic data and metadata while enabling the correction of inconsistencies, redundancies and inaccuracies in the data.

You can profile your source or target data to discover structure, relationships and data rules. Data Profiling provides statistical information about compliant data and outliers, including Six Sigma. You can drill down into the anomalies and derive data rules manually or automatically. You can use these data rules to monitor the quality of data in your data warehouse.

You can perform attribute analysis, referential analysis, functional dependency analysis or profile data against custom designed rules.

The steps are displayed in the following diagram.

In the initial step the source data is profiled and all the information the data holds is detected. In step 2 you derive some of the data rules and then use these derived rules to derive corrections (step 3). These corrections are regular Warehouse Builder mappings and you need to deploy those in step 4. The last step runs the correction mappings to cleanse the data in the target.

Articles Related

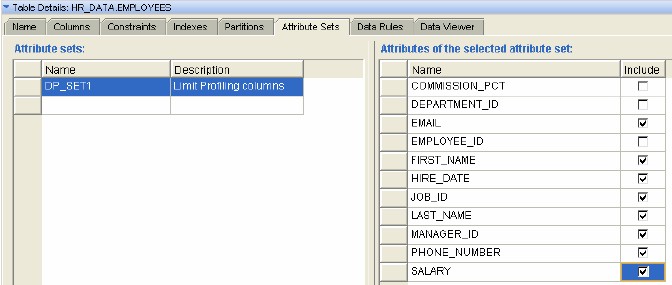

Table attribute sets

Use attribute sets in Warehouse Builder to determine which columns on a table are candidates for profiling, then in the profiling editor choose which ones you really are going to profile.

For example, do you really want to profile a system generated numerical key field out of the ERP system or use those resources to profile that varchar2(4000) column labeled “additional information”?

Even with the sample size at a small sample, you need to remember that a lot of the profiling resources are spent on working on column relationships. So again, don’t just profile every column and try to see if there are relationships between all of them, because you are not efficiently using the resources.

Data Profiling Tab

Documentation / Reference

- Oracle Data Watch and Repair for Master Data Management - Good description of each tab from the data profiling

- You can find the Oracle Warehouse Builder Data Profiling Reports Demonstration here: owb_home/owb/misc/dp_htmldb/README.html