Code - Testing (Software Quality Assurance|SQA|Validator|Checker)

About

A test is performed to verify that the system conforms to its specification. Testing is the most important part of code quality.

In a “test-driven software development (tdd)” model, unit tests are written first.

Testing is questioning a product in order to evaluate it.

There are two general types of test:

- unit (application, functional)

- or system (hardware, load)

Testing can only reveal the presence of errors, not the absence of errors.

Testing might demonstrate the presence of bugs very convincingly, but is hopelessly inadequate to demonstrate their absence.

If the customer is able to understand or even write the tests, then they can serve as a requirements collection. If the tests are written in advance, they serve as a requirements specification. During implementation, they give feedback on how far along we are. They also deliver up-to-date documentation.

There are trade-offs between testing/QA effort vs wiki/confidence.

The ease of testing a function is inversely proportional to the number of levels of abstraction it deals with.

The three most common programming errors are:

If debugging is the process of removing bugs, then programming must be the process of putting them in.

You are never sure a design is good until it has been tested by many. Be prepared and eager to learn then adapt. You can never know everything in advance.

Why test ?

Testing allows you:

- to work in parallel

- to get a working branch. How many times have we checked out a branch to create a new feature, only to find that the codebase was not working?

- to cost less

- to structure the code. If you cannot write a test, it means that your function is too complicated and must be split.

- to improve documentation. A test demonstrates a minimal interaction with the code.

- to increase observability

- to follow the planning

- to not be afraid of merging.

Work in parallel

People are not comfortable when code is developed in parallel without tests. The basic fallback strategy is to give the entire codebase a single owner. The code is then simply locked by one person.

The email below is a beautiful example:

Translation:

Dear Nico,

To be sure, you cannot yet begin with this change:

the PHP and the Oracle parts are still under control.

Cost effective

If it costs 1 to find and fix a requirement-based problem during the requirements definition process, it can cost 5 to repair it during design, 10 during coding, 20 during unit testing, and as much as 200 after delivery of the system. Thus, finding bugs early on in the software life cycle pays off.

Documentation

Testing is like documentation. More isn’t necessarily better.

Quality Software Properties

Test

Data Modeling

Generating test data that is sparse, incomplete, and in some cases fully complete and fully correct allows testing of:

- the domain alignment

- the normalization,

- zero-key generation,

- elimination of duplicates,

- etc…

Process Flow

- the start and end dates of specific records (i.e. the delta processing detection),

- data reconciliation (also known as balancing) between all areas (between the source system and the staging area, the operational data store and the staging area, …)

- the transactional volume. If you receive 100 records per day and then suddenly 20, you may have an error in the change data capture process.
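The volume and reconciliation checks above can be sketched as follows. This is only an illustration: the function names `volume_alert` and `reconcile` and the 50% tolerance are assumptions, not part of any particular tool.

```python
from statistics import mean

def volume_alert(daily_counts, today_count, tolerance=0.5):
    """Return True when today's record count deviates from the
    historical mean by more than `tolerance` (a fraction).
    The 0.5 default is an arbitrary, illustrative threshold."""
    baseline = mean(daily_counts)
    if baseline == 0:
        return today_count != 0
    return abs(today_count - baseline) / baseline > tolerance

def reconcile(source_count, target_count):
    """Return the difference in record counts between two areas
    (for example source system vs staging area); 0 means balanced."""
    return source_count - target_count

# 100 records per day, then suddenly 20: the check raises an alert.
history = [100, 100, 100]
print(volume_alert(history, 20))   # deviation of 80% exceeds the tolerance
print(reconcile(100, 100))         # balanced areas give a difference of 0
```

In practice the threshold would be tuned to the normal variability of the feed.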

When you can't directly access the data source (e.g. web-service data source), the data can be stored in a staging area just for testing purposes later.

Volume tests

A volume test is a functional test that uses a high volume of generated data to make sure that:

- the partitioning is right

- the performance is acceptable

- the indexing works

and to test:

- the joins

- and the load cycle performance as well.

Type of test

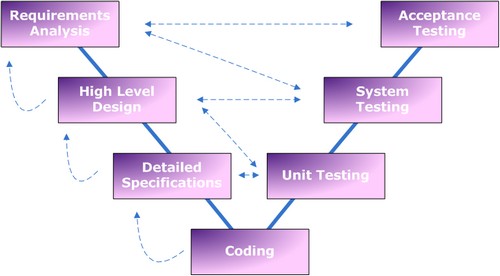

Testing level

Tests are frequently grouped by where they are added in the software development process, or by the level of specificity of the test.

- Integration testing. Works to expose defects in the interfaces and interaction between integrated components (modules).

- System integration testing verifies that a system is integrated to any external or third party systems defined in the system requirements.

- System testing. Tests a completely integrated system to verify that it meets its requirements.

- Acceptance testing can mean one of two things:

  - A smoke test is used as an acceptance test prior to introducing a new build to the main testing process, i.e. before integration or regression.

  - Acceptance testing performed by the customer, often in their lab environment on their own hardware, is known as user acceptance testing (UAT). Acceptance testing may be performed as part of the hand-off process between any two phases of development.

Unit testing

Unit testing refers to tests that verify the functionality of a specific section of code, usually at the function level. In an object-oriented environment, this is usually at the class level, and the minimal unit tests include the constructors and destructors.

Functional tests

Example of a functional test: a table must show only 20 rows per page and must show “No Data” when the table is empty.

| Functional Test | Number | Case |
|---|---|---|
| 1 | 1 | no data |
| 1 | 2 | one row |
| 1 | 3 | 5 rows |
| 1 | 4 | exactly 20 rows |
| 1 | 5 | 21 rows |
| 1 | 6 | 40 rows |
| 1 | 7 | 41 rows |

Because each case requires manipulating the data in the database, you can't run these tests concurrently. The whole functional test is difficult to set up, whereas a single unit test is easy and quick. A unit test can call the table rendering directly and assert the proper “paging” without even having a database.
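A minimal sketch of such a unit test, with no database involved. The functions `render_page` and `page_count` are hypothetical stand-ins for the real table rendering code, and the expected page counts are derived from the 20-row limit above.

```python
from math import ceil

PAGE_SIZE = 20  # maximum rows per page, from the functional requirement

def render_page(rows, page=1, page_size=PAGE_SIZE):
    """Return the rows visible on the given 1-based page,
    or the string "No Data" when the table is empty."""
    if not rows:
        return "No Data"
    start = (page - 1) * page_size
    return rows[start:start + page_size]

def page_count(rows, page_size=PAGE_SIZE):
    """Number of pages needed to show all rows."""
    return ceil(len(rows) / page_size)

# (number of rows, expected number of pages) for the seven cases
CASES = [(0, 0), (1, 1), (5, 1), (20, 1), (21, 2), (40, 2), (41, 3)]

def run_paging_cases():
    """Check every case against in-memory rows; return the number of cases run."""
    for n_rows, expected_pages in CASES:
        rows = list(range(n_rows))
        assert page_count(rows) == expected_pages
        first_page = render_page(rows)
        if n_rows == 0:
            assert first_page == "No Data"
        else:
            assert len(first_page) == min(n_rows, PAGE_SIZE)
    return len(CASES)

run_paging_cases()
```

Because the rows are built in memory, the seven cases are independent and could run concurrently.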

Regression testing

Regression testing is any type of software testing that seeks to uncover software errors by partially retesting a modified program. The intent of regression testing is to provide a general assurance that no additional errors were introduced in the process of fixing other problems. Regression testing is commonly used to test the system efficiently by systematically selecting the minimum suite of tests needed to adequately cover the affected change. Common methods of regression testing include rerunning previously run tests and checking whether previously fixed faults have re-emerged. “One of the main reasons for regression testing is that it's often extremely difficult for a programmer to figure out how a change in one part of the software will echo in other parts of the software.”

Regression testing asserts that everything that worked yesterday still works today. To achieve this, the tests must not depend on random data.
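One common way to keep generated test data repeatable is to seed the random generator, so that every run sees the same values. The sketch below assumes a hypothetical helper `make_test_rows`; the seed value 42 is arbitrary.

```python
import random

def make_test_rows(n, seed=42):
    """Generate n pseudo-random integers from a private, seeded generator.
    The same seed always yields the same rows, so a test built on them
    behaves identically today and tomorrow."""
    rng = random.Random(seed)  # private generator, does not touch global state
    return [rng.randint(1, 1000) for _ in range(n)]

# Two independent runs produce identical data.
first_run = make_test_rows(5)
second_run = make_test_rows(5)
print(first_run == second_run)
```

Using a private `random.Random` instance rather than the module-level functions keeps the data stable even if other code consumes random numbers in between.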

Non-functional testing

Performance testing

Performance testing is in general testing performed to determine how a system performs in terms of responsiveness and stability under a particular workload.

Performance testing is executed to determine how fast a system or sub-system performs under a particular workload.

It can also serve to validate and verify other quality attributes of the system, such as resource usage.
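As a rough illustration, the time a function takes under a given workload can be measured with the standard library. The helper `measure` and the workload `sort_workload` are assumptions made for this sketch; real performance tests usually rely on dedicated tooling.

```python
import statistics
import time

def measure(func, workload, repeats=5):
    """Run func(workload) several times and return timing
    statistics (in seconds) for the repeated runs."""
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        func(workload)
        timings.append(time.perf_counter() - start)
    return {
        "min": min(timings),
        "median": statistics.median(timings),
        "max": max(timings),
    }

def sort_workload(n):
    """Hypothetical unit of work: sort n integers given in reverse order."""
    return sorted(range(n, 0, -1))

stats = measure(sort_workload, 10_000)
print(stats)
```

Repeating the measurement and reporting the median reduces the influence of a single slow run.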

Load testing is primarily concerned with testing that the system can continue to operate under a specific load, whether that be:

- large quantities of data

- or a large number of users.

This is generally referred to as software scalability. The related load testing activity is often referred to as endurance testing.

- Volume testing is a way to test functionality.

- Stress testing is a way to test reliability.

- Load testing is a way to test performance.

There is little agreement on what the specific goals of load testing are. The terms load testing, performance testing, reliability testing, and volume testing are often used interchangeably.

Others

- Stability testing checks whether the software can continuously function well for or beyond an acceptable period. This testing is often referred to as load (or endurance) testing.

- Security testing is essential for software that processes confidential data, to prevent poor data security and system intrusion by hackers.

- Internationalization and localization testing verifies that the application still works, even after it has been translated into a new language or adapted for a new culture (such as different currencies or time zones).

- Destructive testing attempts to cause the software or a sub-system to fail, in order to test its robustness.

- Usability testing checks whether the user interface is easy to use and understand.

Data-Driven testing

In a data-driven test, you store the test data (input, expected output, etc.) in some external storage (database, spreadsheet, XML files, etc.) and use it iteratively as parameters in your tests.
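A minimal sketch of the idea: the test data lives in CSV form and a single loop feeds each record to the code under test. Here the CSV is an in-memory string standing in for an external file, and `page_count` is a hypothetical function under test.

```python
import csv
import io

# Stand-in for an external CSV file holding inputs and expected outputs.
TEST_DATA = """row_count,expected_pages
0,0
1,1
20,1
21,2
41,3
"""

def page_count(row_count, page_size=20):
    """Hypothetical function under test: pages needed for row_count rows."""
    return -(-row_count // page_size)  # ceiling division on integers

def run_data_driven(data=TEST_DATA):
    """Run the function once per data record and collect the failures
    as (input, expected, actual) tuples."""
    failures = []
    for record in csv.DictReader(io.StringIO(data)):
        row_count = int(record["row_count"])
        expected = int(record["expected_pages"])
        actual = page_count(row_count)
        if actual != expected:
            failures.append((row_count, expected, actual))
    return failures

print(run_data_driven())  # an empty list means every record passed
```

Adding a new case then means adding a line of data, not writing new test code.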

Concurrent user

How do you determine “Concurrent” users?

- Oracle adopts the 10-20% rule:

  - 10-20% of users will be logged in to Oracle BI at any one time

  - 10-20% of those logged-in users will actually be running a query

So for 3000 “Total” users, you could expect between 30 and 120 “Concurrent” users.

Take time zones into account: for a “Global” implementation, for example, divide the “Total” number of users by 3.
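The arithmetic behind the rule can be sketched as below. The function name `concurrent_users` and its parameters are illustrative; percentages are kept as integers to avoid floating-point rounding.

```python
def concurrent_users(total_users, low_pct=10, high_pct=20, time_zone_divisor=1):
    """Apply the 10-20% rule twice: once for logged-in users,
    once for the share of those users running a query.
    Returns the (low, high) range of concurrent users."""
    effective = total_users // time_zone_divisor
    low = effective * low_pct * low_pct // 10_000
    high = effective * high_pct * high_pct // 10_000
    return low, high

print(concurrent_users(3000))                       # (30, 120)
print(concurrent_users(3000, time_zone_divisor=3))  # (10, 40) for a global implementation
```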

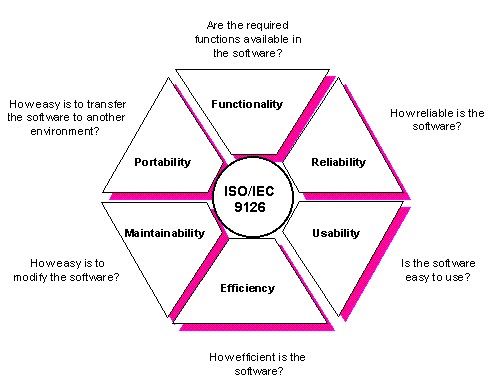

ISO

Model

Glossary

- SAT = site acceptance test

- FAT = Factory acceptance test

- UAT = User acceptance test

- USR = User system requirement

USR (URS) - User System Requirements (User Requirements Specification)

These must be the same thing. This should be a document explaining exactly what the user (customer) wants from the system. Not how it's going to work, but what it's going to do. “I want a machine to make coffee, and it must have the option for white, black, espresso and chicken soup. Oh, and sugar. Oh and it must have two different sizes of cups. And a biscuit dispenser.” etc. This should be signed off between supplier and customer at the outset so there is an agreed set of deliverables that can be measured at the end. (Things like “two different sizes of cups” are not a good example. These should be specified in fluid ounces, millilitres or whatever. This is a specification document, not just a description.)

FDS - Functional Design Specification

Here the supplier is converting the needs (the 'what') into the methods (the 'how'). This document defines how the supplier intends to fulfil the URS. At the same time, and following on from the URS, some form of test specification should be beginning to emerge. How can we tell if it's working OK? What will be the agreed measures?

FAT - Factory Acceptance Test

Once the machine/system/software has been assembled at the supplier's factory there should be a formal test with checkpoints and performance measures to prove that it is capable of meeting the URS. NB This does not mean it has to meet the URS there and then. That may be impossible until it's finally plumbed in on site. Very often customers will ask to be present at the FAT to witness this before giving permission for the system to be shipped to their site. Some projects will have stage payments on successful completion of a witnessed FAT.

UAT - User Acceptance Test

Carried out at user's premises with equipment installed and ready to run. Must prove that it can do everything agreed in the URS. This is the point where the user satisfies himself that the system is substantially as requested and accepts delivery of the equipment. From that point he gets invoiced for the system. Further work may continue by the supplier, but this should be agreed and defined in change notices or deviations to original specifications, and I would expect most of this will represent an additional charge.

Why not test

- 1- too hard to set up, it needs to be easy (for instance, UI code: it's hard but not impossible)

- 2- the code is simple (is self-documenting, maintainable)

- 3- too hard to mock (EasyMock, Mockito, JMock)

- 4- Not enough time

- 5- Effort vs value (ROI)

- 6- I'm the only developer

Example of Job Description

- Description: Software Quality Assurance Engineer, Senior position available at our office

- DUTIES: Work on complex problems of diverse scope where analysis of situation or data requires in-depth evaluation of various factors which may be difficult to define, develop quality standards for Yahoo products, certification & execution of software test plans & analysis of test results. Write test cases, develop automated tests, execute test cases and document results, and work closely with engineers and product managers while writing defect descriptions. Define testing strategy, test plan, and come up with test cases for Search BE/API. Implement test cases in Java code utilizing existing test framework. Write automation code. Utilize automated test suite to drive functional tests, certify release candidates, and provide sign-off for weekly releases. Enhance automation tools and continuously improve team efficiency.

- REQUIREMENTS: Bachelor’s degree in Computer Science, Engineering or related technical field followed by 8 years of progressive post-baccalaureate experience in job offered or a computer-related occupation. In the alternative, employer will accept a Master’s degree in Computer Science, Engineering or related technical field and 6 years of experience in job offered or a computer-related occupation.

- Experience must include:

- 8 years (or 6 years if Master’s degree) of experience testing highly scalable Web applications with project lead role in complex projects

- 4 years (or 3 years if Master’s degree) of Quality Assurance automation experience in front-end and back-end automation framework such as Selenium, TestNG, Maven, Hudson, version control/GIT, test case management, and bug tracking

- 3 years of coding experience in Java or C++ and/or PHP

- At least 1 year of experience in software development

- Knowledge of Quality Assurance processes and methodology

- Agile software development team.

First Law of Software Quality

<MATH> \begin{array}{lrl} \text{errors} & =& (\text{more code})^2 \\ e & = & mc^2 \end{array} </MATH>