Definition

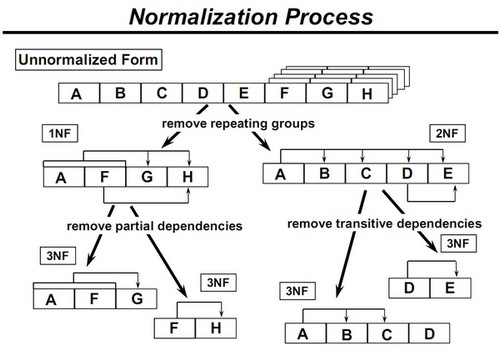

Normalization is a logical data base design method. Normalization is a process of systematically breaking a complex table into simpler ones. It is built around the concept of normal forms.

Database normalization is a data normalization process that reduce data to its canonical form.

Articles Related

Purposes of Normalization

In the design of a data model, normalization is the process of adjusting table and relations to:

- eliminate certain types of data (redundancy|replication) to improve consistency,

- produce a clearer and readable data model.

- provide maximum flexibility to meet future information needs by keeping tables corresponding to object types in their simplified forms.

- avoid update anomalies

An update anomaly is a problem when:

- inserting (no place to insert new information),

- deleting (lost of information),

- or updating (inconsistency may occur because of the existence of data redundancy)

a database because of the structure of the relations.

Normalization: Pros and Cons

Pros

- Reduce data redundancy & space required

- Enhance data consistency

- Enforce data integrity

- Reduce update cost

- Provide maximum flexibility in responding ad hoc queries

- Allow the use of parallelism,

- Can reduce the total number of rows per block.

Improve:

Cons

- Many complex queries will be slower because joins have to be performed to retrieve relevant data from several normalized tables

- Programmers/users have to understand the underlying data model of an database application in order to perform proper model of an database application in order to perform proper joins among several tables

- The formulation of multiple-level queries is a non-trivial task.

Normalization steps

3NF/2NF Normalization Example

An example of a 2NF table that fails to meet the requirements of 3NF is:

Tournament Winners Tournament

| Tournament | Year | Winner | Date of Birth |

|---|---|---|---|

| Indiana Invitational | 1998 | Al Fredrickson | 21 July 1975 |

| Cleveland Open | 1999 | Bob Albertson | 28 September 1968 |

| Des Moines Masters | 1999 | Al Fredrickson | 21 July 1975 |

| Indiana Invitational | 1999 | Chip Masterson | 14 March 1977 |

Because each row in the table needs to tell us who won a particular Tournament in a particular Year, the composite key {Tournament, Year} is a minimal set of attributes guaranteed to uniquely identify a row. That is, {Tournament, Year} is a candidate key for the table.

The breach of 3NF occurs because the non-prime attribute Winner Date of Birth is transitively dependent on the candidate key {Tournament, Year} via the non-prime attribute Winner. The fact that Winner Date of Birth is functionally dependent on Winner makes the table vulnerable to logical inconsistencies, as there is nothing to stop the same person from being shown with different dates of birth on different records.

In order to express the same facts without violating 3NF, it is necessary to split the table into two:

Tournament Winners

| Tournament | Year | Winner |

|---|---|---|

| Indiana Invitational | 1998 | Al Fredrickson |

| Cleveland Open | 1999 | Bob Albertson |

| Des Moines Masters | 1999 | Al Fredrickson |

| Indiana Invitational | 1999 | Chip Masterson |

Player Dates of Birth

| Player | Date of Birth |

|---|---|

| Chip Masterson | 14 March 1977 |

| Al Fredrickson | 21 July 1975 |

| Bob Albertson | 28 September 1968 |

Update anomalies cannot occur in these tables, which are both in 3NF.