, such as Hive_ccMapR.)

Information from the cluster (needed during the configuration of the Big Data Management instance):

* HDInsight Cluster Hostname: Name of the HDInsight cluster where you want to create the Informatica domain.

* HDInsight Cluster Login Username: User login for the cluster. This is usually the same login you use to log in to the Ambari cluster management tool.

* Password: Password for the HDInsight cluster user.

* HDInsight Cluster SSH Hostname: SSH host name of the cluster.

* HDInsight Cluster SSH Username: Account name you use to log in to the cluster head node.

* Password: Password to access the cluster SSH host.

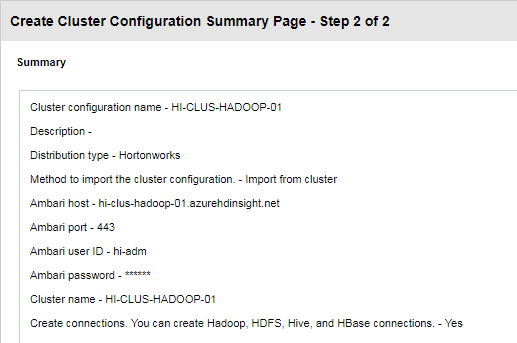

* [[db:hadoop:ambari:ambari|Ambari]] port: Port to access the Ambari cluster management web page. Default is 443.

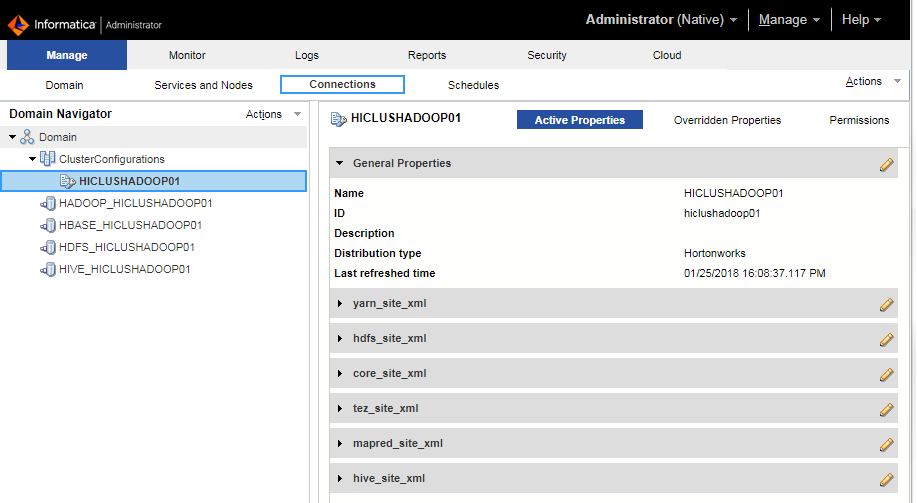
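Before running the import, you can check that the Ambari port and the cluster login work. This is a minimal sketch, not an Informatica tool. It assumes the Ambari gateway is reachable as `https://<cluster>.azurehdinsight.net:<port>` and queries the Ambari REST endpoint `/api/v1/clusters` with curl, which prompts for the password. The function names and the `CURL_CMD` override (used only for dry runs) are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: check that the Ambari web page answers with the cluster login.
# ASSUMPTION: Ambari is exposed at https://<cluster>.azurehdinsight.net:<port>.

# ambari_url CLUSTER [PORT] -> prints the Ambari REST URL that lists clusters
ambari_url() {
  local cluster=$1
  local port=${2:-443}   # default Ambari port given above
  printf 'https://%s.azurehdinsight.net:%s/api/v1/clusters\n' "$cluster" "$port"
}

# check_ambari CLUSTER LOGIN [PORT]
# Only the user name is passed to -u, so curl prompts for the password.
# CURL_CMD is a hypothetical override used to dry-run the function.
check_ambari() {
  local url
  url=$(ambari_url "$1" "${3:-443}")
  ${CURL_CMD:-curl} --fail --silent --show-error -u "$2" "$url" > /dev/null
}

# Example (hypothetical cluster name and login):
# check_ambari hi-clu admin && echo "Ambari reachable"
```

A non-zero exit status means the URL, the port, or the credentials need to be checked before the import.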

When you perform the import, the cluster configuration wizard creates the following connections to access the Hadoop environment (if you choose this option):

* Hadoop

* HBase

* HDFS

* Hive

* In the HDFS storage location, create the following directories on the cluster and set permissions to 777:

* Blazeworkingdir

* SPARK_HDFS_STAGING_DIR

* SPARK_EVENTLOG_DIR
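The directory creation can be scripted from a cluster node with the `hdfs dfs` client. This is a sketch under assumptions: the paths in the example call are hypothetical placeholders for the values you give to Blazeworkingdir, SPARK_HDFS_STAGING_DIR and SPARK_EVENTLOG_DIR, and the `HDFS_CMD` variable exists only so the function can be dry-run.

```shell
#!/usr/bin/env bash
# Sketch: create the BDM working directories in the HDFS storage location
# and set their permissions to 777.
# ASSUMPTION: the example paths below are hypothetical; use the values you
# configured for Blazeworkingdir, SPARK_HDFS_STAGING_DIR and SPARK_EVENTLOG_DIR.

# Left unquoted on purpose below so that "hdfs dfs" splits into two words.
HDFS_CMD=${HDFS_CMD:-"hdfs dfs"}

# create_bdm_dirs DIR...
create_bdm_dirs() {
  local dir
  for dir in "$@"; do
    # -p creates missing parents and does not fail if the directory exists
    $HDFS_CMD -mkdir -p "$dir" || return 1
    $HDFS_CMD -chmod 777 "$dir" || return 1
  done
}

# Example (hypothetical paths), run on a cluster node:
# create_bdm_dirs /blaze/workdir /spark/staging /spark/eventlog
```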

==== Decryption ====

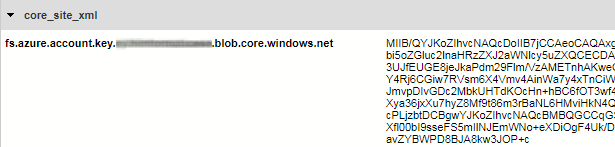

To decrypt the Azure storage key stored in core-site.xml, you need to provide the decryption key.

The decryption key is sent to the nodes inside the application, so you need to repeat this operation each time the cluster is (re)created.

As root, create the target directories:

mkdir /usr/lib/hdinsight-common

mkdir /usr/lib/hdinsight-common/scripts/

mkdir /usr/lib/hdinsight-common/certs/

Copy the following files from the cluster to the BDM machine and give ownership to the powercenter user:

/usr/lib/hdinsight-common/scripts/decrypt.sh

/usr/lib/hdinsight-common/certs/key_decryption_cert.prv

Example, with the two files first copied to the /tmp directory:

cp /tmp/decrypt.sh /usr/lib/hdinsight-common/scripts/

cp /tmp/key_decryption_cert.prv /usr/lib/hdinsight-common/certs/

chown -R powercenter:powercenter /usr/lib/hdinsight-common

chmod +x /usr/lib/hdinsight-common/scripts/decrypt.sh
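The copy steps can be wrapped in one function that is easy to rerun after each re-creation of the cluster. This is a minimal sketch, assuming the two files were already transferred from the cluster into a staging directory (for example /tmp). The function name and its optional destination and owner arguments are hypothetical; the defaults are the paths and the powercenter user used on this page. Run it as root.

```shell
#!/usr/bin/env bash
# Sketch: install the HDInsight decryption script and certificate on the
# BDM machine (run as root).
# ASSUMPTION: decrypt.sh and key_decryption_cert.prv were already copied
# from the cluster into STAGING_DIR.

# install_decrypt_files STAGING_DIR [DEST_ROOT] [OWNER]
#   DEST_ROOT defaults to /usr/lib/hdinsight-common
#   OWNER     defaults to powercenter:powercenter
install_decrypt_files() {
  local src=$1
  local dest=${2:-/usr/lib/hdinsight-common}
  local owner=${3:-powercenter:powercenter}

  # Stop before touching anything if one of the files is missing
  local f
  for f in decrypt.sh key_decryption_cert.prv; do
    [ -f "$src/$f" ] || { echo "missing $src/$f" >&2; return 1; }
  done

  mkdir -p "$dest/scripts" "$dest/certs" || return 1
  cp "$src/decrypt.sh" "$dest/scripts/" || return 1
  cp "$src/key_decryption_cert.prv" "$dest/certs/" || return 1
  chown -R "$owner" "$dest" || return 1
  chmod +x "$dest/scripts/decrypt.sh"
}

# Example (as root, after copying the files to /tmp):
# install_decrypt_files /tmp
```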

==== Host file ====

* Add an entry for headnodehost to the /etc/hosts file.

Example:

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

10.0.0.16 headnodehost hn0-HI-CLU hn0-hi-clu.3qy321ubaea5iyw5joamf.ax.internal.cloudapp.net

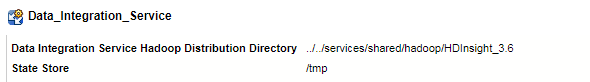
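Because the entry has to be added again after each re-creation of the cluster, a small idempotent helper can replace any existing headnodehost line instead of appending a duplicate. A sketch, to be run as root on the BDM machine; the function name and the `HOSTS_FILE` override are hypothetical, and the values in the example call come from the sample above.

```shell
#!/usr/bin/env bash
# Sketch: add or update the headnodehost entry in /etc/hosts (run as root).
# HOSTS_FILE is a hypothetical override, useful for testing on a copy.

# set_headnodehost IP [ALIAS...]
set_headnodehost() {
  local hosts_file=${HOSTS_FILE:-/etc/hosts}
  local ip=$1
  shift
  local line="$ip headnodehost"
  if [ $# -gt 0 ]; then
    line="$line $*"
  fi

  local tmp
  tmp=$(mktemp) || return 1
  # Copy every line except those that already map headnodehost;
  # comment lines are kept as they are.
  awk '/^[ \t]*#/ { print; next }
       { keep = 1
         for (i = 2; i <= NF; i++) if ($i == "headnodehost") keep = 0 }
       keep' "$hosts_file" > "$tmp" || { rm -f "$tmp"; return 1; }
  printf '%s\n' "$line" >> "$tmp"
  # Write back with cat so the file keeps its owner and permissions
  cat "$tmp" > "$hosts_file"
  local rc=$?
  rm -f "$tmp"
  return $rc
}

# Example with the values from the sample above:
# set_headnodehost 10.0.0.16 hn0-HI-CLU hn0-hi-clu.3qy321ubaea5iyw5joamf.ax.internal.cloudapp.net
```

Running it twice with the same arguments leaves a single headnodehost line.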

==== Hadoop Distribution ====

In the DIS configuration, make sure the property “Data Integration Service Hadoop Distribution Directory” has the correct value: it must be set to “HDInsight_3.6”.
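As a quick check on the BDM machine, the sketch below verifies that the HDInsight_3.6 distribution directory exists under the installation directory. It assumes the layout `<INFA_HOME>/services/shared/hadoop/<distribution>/infaConf`, inferred from the hadoopEnv.properties path on this page (where INFA_HOME appears to be /home/powercenter); the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: confirm that the Hadoop distribution directory used by the DIS exists.
# ASSUMPTION: layout <INFA_HOME>/services/shared/hadoop/<distribution>/infaConf.

# check_hadoop_distribution INFA_HOME [DISTRIBUTION]
check_hadoop_distribution() {
  local infa_home=$1
  local dist=${2:-HDInsight_3.6}
  local dir="$infa_home/services/shared/hadoop/$dist"
  if [ -d "$dir/infaConf" ]; then
    echo "found $dir"
  else
    echo "missing $dir/infaConf" >&2
    return 1
  fi
}

# Example:
# check_hadoop_distribution /home/powercenter
```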

==== Node ODBC ====

To read data from or write data to ODBC sources on each node:

* ODBC must be installed

* the Informatica environment must be set

=== Node ODBC installation ===

* Connect to each node with the HDInsight sshuser (or create a bash script) and run the following commands as root (or with sudo):

cd /tmp

wget http://www.unixodbc.org/unixODBC-2.3.6.tar.gz

tar xvf unixODBC-2.3.6.tar.gz

cd unixODBC-2.3.6/

./configure

make

make install

export LD_LIBRARY_PATH=/usr/local/lib/
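The "bash file" option can be sketched as a loop that streams the same build steps to every node over SSH. Assumptions: the SSH user is `sshuser` and can run sudo without a password, and the node names are passed as arguments (the names in the example are hypothetical). The `export LD_LIBRARY_PATH` line is left out because it only affects the shell it runs in. `SSH_CMD` and `SSH_USER` are hypothetical overrides.

```shell
#!/usr/bin/env bash
# Sketch: build and install unixODBC on every cluster node over SSH.
# ASSUMPTIONS: "sshuser" is the SSH user and has passwordless sudo;
# SSH_CMD and SSH_USER are hypothetical overrides (e.g. for dry runs).

UNIXODBC_VERSION=2.3.6

# Prints the commands run on each node (the build steps listed above)
remote_install_script() {
  cat <<EOF
set -e
cd /tmp
wget http://www.unixodbc.org/unixODBC-${UNIXODBC_VERSION}.tar.gz
tar xzf unixODBC-${UNIXODBC_VERSION}.tar.gz
cd unixODBC-${UNIXODBC_VERSION}
./configure
make
make install
EOF
}

# install_unixodbc_on_nodes NODE...
install_unixodbc_on_nodes() {
  local node
  for node in "$@"; do
    echo "== installing unixODBC on $node" >&2
    # The script is sent on stdin to a root bash started with sudo
    remote_install_script |
      ${SSH_CMD:-ssh} "${SSH_USER:-sshuser}@$node" 'sudo bash -s' || return 1
  done
}

# Example (hypothetical node names):
# install_unixodbc_on_nodes hn0-hi-clu wn0-hi-clu wn1-hi-clu
```

Sending the script on stdin keeps a single copy of the build steps on the machine you run it from, so nothing has to be copied to the nodes first.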

=== Node ODBC environment variable ===

The hadoopEnv.properties file must contain the ODBC environment configuration parameters so that a mapping running in the Hive environment can read from or write to ODBC. See ERROR: “RR_4036 Check the ODBCINI environment variable”.

The file is located on the BDM machine at /home/powercenter/services/shared/hadoop/HDInsight_3.6/infaConf/hadoopEnv.properties

infapdo.env.entry.odbcini=ODBCINI=HADOOP_NODE_INFA_HOME/ODBC7.1/odbc.ini

infapdo.env.entry.odbchome=ODBCHOME=HADOOP_NODE_INFA_HOME/ODBC7.1
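The entries can be appended with a script that leaves the file untouched when a key is already defined. This is a sketch: the function name is hypothetical, and the ODBCHOME entry uses a distinct key, `infapdo.env.entry.odbchome` (an assumed name), because a properties file keeps only one value per key. The values are copied as written on this page.

```shell
#!/usr/bin/env bash
# Sketch: append the ODBC environment entries to hadoopEnv.properties
# only when their keys are not defined yet.
# ASSUMPTION: the key name infapdo.env.entry.odbchome.

# add_odbc_env_entries [FILE]
add_odbc_env_entries() {
  local file=${1:-/home/powercenter/services/shared/hadoop/HDInsight_3.6/infaConf/hadoopEnv.properties}
  [ -f "$file" ] || { echo "not found: $file" >&2; return 1; }
  local entry key
  for entry in \
    'infapdo.env.entry.odbcini=ODBCINI=HADOOP_NODE_INFA_HOME/ODBC7.1/odbc.ini' \
    'infapdo.env.entry.odbchome=ODBCHOME=HADOOP_NODE_INFA_HOME/ODBC7.1'; do
    key=${entry%%=*}   # text before the first "="
    # awk exits 0 only if a line starts with "key="
    if ! awk -v k="$key=" 'index($0, k) == 1 { found = 1 } END { exit !found }' "$file"; then
      printf '%s\n' "$entry" >> "$file" || return 1
    fi
  done
}

# Example (on the BDM machine):
# add_odbc_env_entries
```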

==== Operations after each recreation of the cluster ====

* Update the cluster information in the BDM admin console: Connection > Actions > Refresh Cluster Configuration.

* Add or update the entry for the headnodehost in the /etc/hosts file

* Copy the private key from the cluster to the same directory location on the BDM machine:

/usr/lib/hdinsight-common/certs/key_decryption_cert.prv
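The key copy can be done in one step from the BDM machine by reading the certificate over SSH. This is a sketch under assumptions: the SSH user can run `sudo cat` on the head node without a password, and the SSH host name in the example is hypothetical. The function name and the `SSH_CMD` override are also hypothetical. Run it as root so that the certificate can be written and its ownership changed.

```shell
#!/usr/bin/env bash
# Sketch: after the cluster is re-created, fetch the new decryption
# certificate from the head node and install it on the BDM machine.
# ASSUMPTIONS: passwordless sudo for the SSH user on the head node;
# SSH_CMD is a hypothetical override used for dry runs.

CERT_PATH=/usr/lib/hdinsight-common/certs/key_decryption_cert.prv

# refresh_decryption_cert SSH_HOST [DEST_FILE] [OWNER]
refresh_decryption_cert() {
  local host=$1
  local dest=${2:-$CERT_PATH}
  local owner=${3:-powercenter:powercenter}
  local tmp
  tmp=$(mktemp) || return 1   # created with mode 600
  # Stream the certificate back; reject a failed or empty read
  if ! ${SSH_CMD:-ssh} "$host" "sudo cat $CERT_PATH" > "$tmp" || [ ! -s "$tmp" ]; then
    echo "could not read $CERT_PATH on $host" >&2
    rm -f "$tmp"
    return 1
  fi
  mkdir -p "$(dirname "$dest")" && mv "$tmp" "$dest" && chown "$owner" "$dest"
}

# Example (hypothetical SSH host name):
# refresh_decryption_cert sshuser@hi-clu-ssh.azurehdinsight.net
```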

===== Support =====

==== Error establishing socket to host and port: hostname.database.windows.net:1433. Reason: DISABLED (No such file or directory) ====

You can get this error when running a silent installation.

Test Connection Exception -java.sql.SQLNonTransientConnectionException: [informatica][SQLServer JDBC Driver]Error establishing socket to host and port: hostname.database.windows.net:1433. Reason: DISABLED (No such file or directory)

Possible resolution:

* You are trying to install a secure domain, but the property TRUSTSTORE_DB_FILE in the SilentInput.properties file is not set.

TRUSTSTORE_DB_FILE indicates the path and file name of the truststore file for the secure domain configuration repository database. If the domain that you create or join uses a secure domain configuration repository, set this property to the truststore file of the repository database:

TRUSTSTORE_DB_FILE=/path/to/myCacerts

Set TRUSTSTORE_DB_PASSWD to the password for the truststore file for the secure domain configuration repository database:

TRUSTSTORE_DB_PASSWD=changeit
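When installing a secure domain, a pre-flight check of the silent input file can catch this case before the installer runs. A minimal sketch, assuming the file uses plain `KEY=VALUE` lines as shown above; the function names are hypothetical, and the check only makes sense when the domain uses a secure configuration repository.

```shell
#!/usr/bin/env bash
# Sketch: check the truststore settings of a silent input file before
# installing a secure domain.
# ASSUMPTION: the file uses KEY=VALUE lines as shown above.

# prop_value FILE KEY -> prints the value of the last KEY=... line
prop_value() {
  awk -v k="$2=" 'index($0, k) == 1 { v = substr($0, length(k) + 1) } END { print v }' "$1"
}

# check_truststore FILE
check_truststore() {
  local file=$1 ts pw
  ts=$(prop_value "$file" TRUSTSTORE_DB_FILE)
  pw=$(prop_value "$file" TRUSTSTORE_DB_PASSWD)
  if [ -z "$ts" ]; then
    echo "TRUSTSTORE_DB_FILE is not set in $file" >&2
    return 1
  fi
  if [ ! -f "$ts" ]; then
    echo "truststore $ts does not exist" >&2
    return 1
  fi
  if [ -z "$pw" ]; then
    echo "TRUSTSTORE_DB_PASSWD is not set in $file" >&2
    return 1
  fi
}

# Example:
# check_truststore SilentInput.properties && echo "truststore settings look complete"
```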

===== Documentation / Reference =====

* BDM MarketPlace

* Implementing Big Data Management 10.2 with Ephemeral Clusters in a Microsoft Azure Cloud Environment

Installation

Open the node Domain > ClusterConfigurations Right Click > Import With Cluster Connection (if with file convention: _