About

Stream processing is the reactive design of data processing.

- Something happened (Event)

- Subscribe to it (Stream)

Stream processing is also known as:

- reactive streams (the reactive specification)

It's called stream processing because the events arrive continuously, as data that is logically named a data stream.

Model

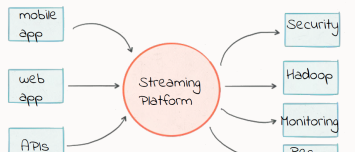

A stream processing flow is a combination of:

- an input source,

- an output destination,

- a sequence of data (each element may be a byte or an object), called a message in the general sense,

- and processing steps that may be chained to form a pipeline.

A pipeline is a query on a stream.
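The model above can be sketched with Python generators. This is only a minimal illustration, not a reference implementation: the function names (`source`, `parse`, `keep_even`, `sink`) and the sample messages are assumptions made for the example.

```python
from typing import Iterable, Iterator, List


def source(lines: Iterable[str]) -> Iterator[str]:
    """Input source: hands out one message at a time."""
    for line in lines:
        yield line


def parse(messages: Iterator[str]) -> Iterator[int]:
    """Processing step (map): turn each text message into an integer."""
    for message in messages:
        yield int(message)


def keep_even(numbers: Iterator[int]) -> Iterator[int]:
    """Processing step (filter): let only even numbers through."""
    for number in numbers:
        if number % 2 == 0:
            yield number


def sink(messages: Iterator[int], out: List[int]) -> None:
    """Output destination: here, simply a list in memory."""
    for message in messages:
        out.append(message)


# Chaining the steps forms the pipeline; each message flows through
# every step before the next message is read from the source.
output: List[int] = []
sink(keep_even(parse(source(["1", "2", "3", "4"]))), output)
print(output)  # [2, 4]
```

Because every step is a generator, no step holds more than one message at a time, which is what keeps the memory overhead low.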

Pipeline

Streams are a flexible system to implement a pipeline of data transformations.

- filter/map (stateless)

- Aggregate

- Stream / Table join (change log streams)

- Window join (message buffer, co-partitioning)

- Reprocessing (Batch and streaming convergence)
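Two of the transformations listed above, a stream/table join followed by a windowed aggregate, could look like the sketch below. The event layout `(timestamp, user_id, page)`, the `users` lookup table and the helper names are hypothetical, and the window logic assumes that events arrive in timestamp order.

```python
from collections import Counter
from typing import Dict, Iterable, Iterator, Tuple

Event = Tuple[int, str, str]  # (timestamp_seconds, user_id, page), assumed layout


def join_with_table(
    events: Iterable[Event], table: Dict[str, str]
) -> Iterator[Tuple[int, str, str]]:
    """Stream/table join: enrich each event with a value looked up in a table."""
    for ts, user, page in events:
        yield ts, table.get(user, "unknown"), page


def tumbling_count(
    events: Iterable[Tuple[int, str, str]], size: int
) -> Iterator[Tuple[int, Dict[str, int]]]:
    """Aggregate: count events per key in fixed, non-overlapping windows.

    Assumes events arrive in timestamp order; a window is emitted as soon
    as the first event of the next window shows up.
    """
    current_window = None
    counts: Counter = Counter()
    for ts, key, _page in events:
        window = ts - ts % size  # start of the window this event belongs to
        if current_window is not None and window != current_window:
            yield current_window, dict(counts)
            counts = Counter()
        current_window = window
        counts[key] += 1
    if current_window is not None:
        yield current_window, dict(counts)  # flush the last open window


users = {"u1": "FR", "u2": "NL"}  # the "table" side of the join
clicks = [(0, "u1", "/a"), (3, "u2", "/b"), (12, "u1", "/a"), (15, "u3", "/c")]
results = list(tumbling_count(join_with_table(clicks, users), size=10))
print(results)
# [(0, {'FR': 1, 'NL': 1}), (10, {'FR': 1, 'unknown': 1})]
```

The join step is stateless apart from the table it reads, while the window step has to keep state (the running counts) until the window closes.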

System Characteristics

A stream has a low memory and processing overhead.

This performance comes at a cost:

- All content to read/write has to be processed in the exact same order as input comes in (or output is to go out).

- No random access. For this, you need to build an auxiliary structure such as a map or a tree.
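The trade-off can be illustrated with a one-pass read. In this sketch (the `records` generator and its data are made up for the example), a running total needs constant memory, whereas random access by key requires building an auxiliary map whose size grows with the number of keys.

```python
from typing import Dict, Iterator, Tuple


def records() -> Iterator[Tuple[str, int]]:
    """A stream of (key, value) records that can only be read once, in order."""
    yield from [("a", 1), ("b", 2), ("c", 3)]


stream = records()
index: Dict[str, int] = {}  # auxiliary structure that enables lookups by key
running_total = 0

for key, value in stream:
    running_total += value  # constant memory: only the sum is kept
    index[key] = value      # memory grows with the number of distinct keys

# The stream is now exhausted: there is no way to go back to record "b"
# except through the auxiliary index built during the single pass.
print(next(stream, None))  # None
print(index["b"])          # 2
print(running_total)       # 6
```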

Problems

A messaging system is a fairly low-level piece of infrastructure—it stores messages and waits for consumers to consume them. When you start writing code that produces or consumes messages, you quickly find that there are a lot of tricky problems that have to be solved in the processing layer.

Consider a counting example: count page views and update a dashboard.

- What happens when the machine that your consumer is running on fails, and your current counter values are lost? How do you recover?

- Where should the processor be run when it restarts? What if the underlying messaging system sends you the same message twice, or loses a message? (Unless you are careful, your counts will be incorrect.)

- What if you want to count page views grouped by the page URL? How do you distribute the computation across multiple machines if it’s too much for a single machine to handle?
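To make these questions concrete, here is a minimal sketch of a page-view counter that tolerates duplicate deliveries and can be restored after a crash. Everything in it is an assumption made for illustration: the class `PageViewCounter`, the idea that each message carries a monotonically increasing offset, and the JSON snapshot format. It is not how any particular framework does it, and it does nothing about lost messages.

```python
import json
import zlib
from collections import Counter


def partition_for(url: str, num_partitions: int) -> int:
    """Route a URL to a partition with a stable hash, so that all views
    of the same page are counted by the same machine."""
    return zlib.crc32(url.encode("utf-8")) % num_partitions


class PageViewCounter:
    """Counts views per URL for one partition (hypothetical helper)."""

    def __init__(self) -> None:
        self.counts: Counter = Counter()
        self.last_offset = -1  # offset of the last message applied

    def process(self, offset: int, url: str) -> None:
        if offset <= self.last_offset:
            return  # duplicate or replayed message: already counted
        self.counts[url] += 1
        self.last_offset = offset

    def checkpoint(self) -> str:
        """Serialize the state so that it can survive a machine failure."""
        return json.dumps(
            {"counts": dict(self.counts), "last_offset": self.last_offset}
        )

    @classmethod
    def restore(cls, snapshot: str) -> "PageViewCounter":
        data = json.loads(snapshot)
        counter = cls()
        counter.counts = Counter(data["counts"])
        counter.last_offset = data["last_offset"]
        return counter


log = [(0, "/home"), (1, "/about"), (2, "/home")]

counter = PageViewCounter()
for offset, url in log[:2]:
    counter.process(offset, url)
snapshot = counter.checkpoint()  # saved before the simulated crash

# After the crash: restore the snapshot and replay the whole log.
# Messages at offsets 0 and 1 are skipped, only offset 2 is applied.
recovered = PageViewCounter.restore(snapshot)
for offset, url in log:
    recovered.process(offset, url)
print(dict(recovered.counts))  # {'/home': 2, '/about': 1}
```

Storing the offset together with the counts is what makes the replay safe: the state and the position in the stream are always restored as a pair.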

Stream processing is a higher level of abstraction on top of messaging systems, and it’s meant to address precisely this category of problems.

Samza is a stream processing framework that aims to help with these problems.

Implementation

Like most asynchronous systems, stream processing is implemented with an event loop.

Queue:

- Multiple input processors

Routing:

- Category variable

Processor selection through:

- Category variable

- Data Type
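A queue, an event loop and processor selection through a category variable can be sketched as follows. The message layout `(category, payload)`, the processor functions and the handling of unknown categories are illustrative assumptions, and the loop is kept single-threaded for simplicity.

```python
from collections import deque
from typing import Any, Callable, Deque, Dict, List, Tuple

Message = Tuple[str, Any]  # (category, payload), assumed layout

handled: List[str] = []  # stands in for real side effects


def on_click(payload: Any) -> None:
    handled.append(f"click:{payload}")


def on_view(payload: Any) -> None:
    handled.append(f"view:{payload}")


# Routing table: the category variable selects the processor.
processors: Dict[str, Callable[[Any], None]] = {
    "click": on_click,
    "view": on_view,
}


def run_event_loop(queue: Deque[Message]) -> None:
    """Take messages off the queue in arrival order and dispatch each one."""
    while queue:
        category, payload = queue.popleft()
        processor = processors.get(category)
        if processor is None:
            handled.append(f"unrouted:{category}")  # no processor registered
            continue
        processor(payload)


queue: Deque[Message] = deque(
    [("view", "/home"), ("click", "buy"), ("scroll", 42)]
)
run_event_loop(queue)
print(handled)  # ['view:/home', 'click:buy', 'unrouted:scroll']
```

Selecting by data type instead of category would only change the lookup key, for instance `type(payload)` rather than the category string.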

Structure Flow:

- Flow Content + Json Object for extra attributes (Ex: ResultSet + TestId)

Loop through an explicit processor (i.e. an if and then go to step N)?

- Lazy instantiation: the operations are only performed when execute() is called. Whether the program is executed locally or on a cluster depends on the type of execution environment. Lazy evaluation makes it possible to construct an execution plan that may differ from the lexical (code) definition.
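The lazy behaviour described above can be imitated in a few lines. The `LazyStream` class below is a hypothetical toy, not the API of any real engine: `map` and `filter` only record operations, and nothing runs until `execute()` is called. A real engine could use the recorded plan to reorder or fuse operations before running them; this sketch runs them as recorded.

```python
from typing import Any, Callable, Iterable, List, Tuple

Op = Tuple[str, Callable[[Any], Any]]


class LazyStream:
    """Records operations instead of running them (hypothetical toy class)."""

    def __init__(self, source: Iterable[Any], ops: Tuple[Op, ...] = ()) -> None:
        self._source = source
        self._ops = ops

    def map(self, fn: Callable[[Any], Any]) -> "LazyStream":
        return LazyStream(self._source, self._ops + (("map", fn),))

    def filter(self, predicate: Callable[[Any], bool]) -> "LazyStream":
        return LazyStream(self._source, self._ops + (("filter", predicate),))

    def plan(self) -> List[str]:
        """The recorded plan, available before anything has been executed."""
        return [kind for kind, _ in self._ops]

    def execute(self) -> List[Any]:
        """Run the recorded operations over the source, in order."""
        data = iter(self._source)
        for kind, fn in self._ops:
            data = map(fn, data) if kind == "map" else filter(fn, data)
        return list(data)


calls: List[int] = []  # records when the mapped function actually runs


def double(x: int) -> int:
    calls.append(x)
    return x * 2


stream = LazyStream([1, 2, 3]).map(double).filter(lambda x: x > 2)
print(stream.plan())  # ['map', 'filter']
print(calls)          # [] because nothing has been executed yet

result = stream.execute()
print(result)         # [4, 6]
print(calls)          # [1, 2, 3]
```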

It is our firm belief that in the future, the majority of data applications, including real-time analytics, continuous analytics, and historical data processing, will treat data as what it really is: unbounded streams of events.

Stream vs Batch

See Stream vs Batch