About

A thread is a unit of execution that lives inside a process. A process acts as a container for its threads and always starts with one of them, the main thread.

A process is a unit of resources, while a thread is a unit of:

- scheduling

- and execution.

Threads are also known as lightweight processes, whereas a process is a “heavyweight” unit of kernel scheduling, because creating, destroying, and switching processes is relatively expensive.

Threads are effectively processes that run in the same memory context and share other resources, such as open files, with their parent process.

The threads independently execute code that operates on values and objects residing in a shared main memory.

Threads may be supported:

- by having many hardware processors,

- by time-slicing a single hardware processor,

- or by time-slicing many hardware processors.

Multithreading is a widespread programming and execution model that allows multiple threads to exist within the context of a single process.

A hardware thread is a thread of code executing on a logical core.

Both processes and threads provide an execution environment, but creating a new thread requires fewer resources than creating a new process.

Threads share the process's resources, including memory and open files. This makes for efficient, but potentially problematic, communication.
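The "efficient, but potentially problematic" trade-off can be illustrated with a shared counter. The following is a minimal sketch in Python; the function name `count_with_lock` and its parameters are hypothetical, chosen only for illustration. Several threads update one variable directly (no copying, no messaging), and a lock guards the update because a read-modify-write is not guaranteed to be atomic.

```python
import threading


def count_with_lock(n_threads: int = 4, increments: int = 10_000) -> int:
    """Increment one shared counter from several threads, guarded by a lock."""
    counter = 0
    lock = threading.Lock()

    def worker() -> None:
        nonlocal counter
        for _ in range(increments):
            # "counter += 1" is a read-modify-write sequence; the lock makes
            # each increment appear indivisible to the other threads.
            with lock:
                counter += 1

    threads = [threading.Thread(target=worker) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter


print(count_with_lock())  # prints 40000 (4 threads x 10_000 increments)
```

Without the lock, two threads could read the same old value and one update could be lost, which is the kind of problem that shared memory makes possible.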

Every application has at least one thread, or several (multithreaded execution) if you count “system” threads that do things like memory management and signal handling.
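As a small illustration, a Python program can inspect its own threads. This is only a sketch: the helper `describe_threads` is a hypothetical name, and the standard `threading` module reports only threads known to the Python runtime, so lower-level "system" threads may not appear in the list.

```python
import threading


def describe_threads() -> list:
    """Return the names of the threads currently alive in this process."""
    return [t.name for t in threading.enumerate()]


main = threading.main_thread()
print(main.name)                      # name of the initial thread of the process
print(threading.active_count() >= 1)  # True: at least one thread is always alive
print(describe_threads())
```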

A thread is a sequence of instructions that execute sequentially. If there are multiple threads, then the processor can work on one for a while, then switch to another, and so on.

In general, the programmer has no control over when each thread runs; the operating system (specifically, the scheduler) makes those decisions. As a result, the programmer cannot tell in which order statements in different threads will be executed.
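A hedged sketch of this effect in Python: each worker records its own number, and the recorded order depends on how the scheduler interleaves the threads. The function name `run_workers` is hypothetical. The only ordering the program enforces itself is that `join()` returns after the worker has finished.

```python
import threading


def run_workers(n: int = 5) -> list:
    """Start n threads that each record their number; return the recorded order."""
    order = []
    lock = threading.Lock()

    def worker(i: int) -> None:
        with lock:
            order.append(i)

    threads = [threading.Thread(target=worker, args=(i,)) for i in range(n)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()  # after the joins, every worker is known to have finished
    return order


order = run_workers()
print(order)          # some permutation of 0..4; it may differ between runs
print(sorted(order))  # prints [0, 1, 2, 3, 4]
```

The set of results is predictable, the order is not. Any ordering that matters has to be imposed explicitly, for example with `join()`, locks, or queues.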

Although threads seem to be a small step from sequential computation, in fact, they represent a huge step. They discard the most essential and appealing properties of sequential computation: understandability, predictability, and determinism.

Threads, as a model of computation, are wildly non-deterministic, and the job of the programmer becomes one of pruning that non-determinism.

Concurrency

Threads in the same process share the same address space. This lets them exchange data without the overhead or complexity of inter-process communication (IPC).
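For example, two threads can hand objects to each other through an ordinary in-memory queue, with no pipe, socket, or serialization involved. The sketch below uses Python's standard `queue.Queue`; the function name `square_all` and the `None` sentinel convention are assumptions made for this illustration.

```python
import queue
import threading


def square_all(values):
    """Send values to a worker thread through an in-process queue."""
    tasks = queue.Queue()
    results = []  # plain list, visible to both threads

    def worker():
        while True:
            item = tasks.get()
            if item is None:  # sentinel: no more work
                break
            results.append(item * item)

    t = threading.Thread(target=worker)
    t.start()
    for v in values:
        tasks.put(v)
    tasks.put(None)
    t.join()  # the worker is done, so results is complete
    return results


print(square_all([1, 2, 3]))  # prints [1, 4, 9]
```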

Model

Synchronous

Synchronous I/O: Many networking libraries and frameworks rely on a simple threading strategy: each network client is assigned a thread upon connection, and this thread deals with the client until it disconnects.

Too many concurrent connections will hurt scalability as system threads are not cheap, and under heavy loads an operating system kernel spends significant time just on thread scheduling management.
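The thread-per-client strategy can be sketched as a small echo server. This is an illustrative sketch in Python, not a production design: the names `handle_client`, `serve`, and `start_echo_server` are hypothetical, and the threads are marked as daemons only so that the example never blocks program exit.

```python
import socket
import threading


def handle_client(conn: socket.socket) -> None:
    """Serve one client on its own thread until it disconnects."""
    with conn:
        while True:
            data = conn.recv(1024)  # blocks this thread only
            if not data:            # empty read: the client closed the connection
                break
            conn.sendall(data)


def serve(listener: socket.socket) -> None:
    """Accept loop: start one new thread per accepted connection."""
    while True:
        try:
            conn, _addr = listener.accept()
        except OSError:             # raised once the listener is closed
            break
        threading.Thread(target=handle_client, args=(conn,), daemon=True).start()


def start_echo_server(host: str = "127.0.0.1", port: int = 0) -> socket.socket:
    """Bind, listen, and run the accept loop on a background thread."""
    listener = socket.create_server((host, port))  # port 0: the OS picks a free port
    threading.Thread(target=serve, args=(listener,), daemon=True).start()
    return listener
```

To try it, call `start_echo_server()`, read the chosen port from `listener.getsockname()[1]`, and connect with `socket.create_connection`. Each connected client costs one thread for as long as it stays connected, which is exactly what limits scalability under many concurrent connections.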

In Java, this thread-per-connection strategy is the traditional model.

Asynchronous

See Event loop

State

Process

A thread is a child of a process.

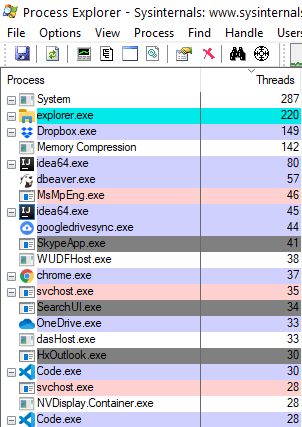

Example: in Process Explorer

Hyperthreading

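With 48 physical cores and hyper-threading (two hardware threads per core), you get 96 logical cores, and therefore at most 96 threads executing at the same instant. More threads can exist, but they have to be time-sliced across those logical cores.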
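The arithmetic can be written down as a short Python sketch. The helper `logical_cores` is a hypothetical name, and the default of two hardware threads per core is an assumption that matches the example above but does not hold for every processor.

```python
import os


def logical_cores(physical_cores: int, threads_per_core: int = 2) -> int:
    """Logical cores = physical cores x hardware threads per core."""
    return physical_cores * threads_per_core


print(logical_cores(48))  # prints 96
print(os.cpu_count())     # logical CPUs reported by the OS here (may be None)
```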