About

Data integrity control is essential to ensuring the overall consistency of the data in your information system's applications.

Application data does not always satisfy the constraints and declarative rules imposed by the information system. You may, for instance, find orders with no customer or order lines with no product.

Oracle Data Integrator provides a working environment to detect these constraint violations and store them for recycling or reporting purposes.


Types of Control

There are two different types of controls:

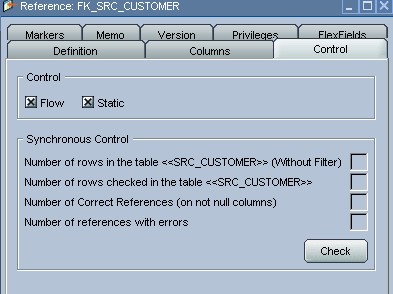

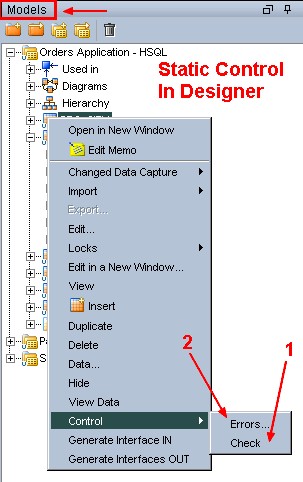

- Static Control

- and Flow Control.

Static

Static Control implies the existence of rules that are used to verify the integrity of your application data. Some of these rules (referred to as constraints) may already be implemented in your data servers (using primary keys, reference constraints, etc.).

With Oracle Data Integrator, you can refine the validation of your data by defining additional constraints without declaring them directly in your servers. This procedure is called Static Control because it performs checks directly on existing (or static) data.
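As an illustration of a check on static data, the sketch below uses Python's built-in `sqlite3` module rather than Oracle Data Integrator, which generates its own checks. It assumes a hypothetical `orders`/`customers` schema in which no foreign key is declared on the server, so the rule "every order references an existing customer" exists only as a query. The constraint name `FK_ORDERS_CUSTOMERS` and the error table `err_orders` are made up for this example. Violating rows are copied to the error table for reporting; the existing data is left untouched.

```python
import sqlite3

# Hypothetical constraint defined outside the data server: every order must
# reference an existing customer. No FOREIGN KEY is declared in the schema.
CONSTRAINT_NAME = "FK_ORDERS_CUSTOMERS"
VIOLATION_QUERY = """
    SELECT o.id, o.customer_id
    FROM orders o
    LEFT JOIN customers c ON c.id = o.customer_id
    WHERE c.id IS NULL
"""


def build_demo_db() -> sqlite3.Connection:
    """Create an in-memory database holding existing (static) application data."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
        CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER);
        CREATE TABLE err_orders (order_id INTEGER, customer_id INTEGER, constraint_name TEXT);
        INSERT INTO customers VALUES (1, 'Acme'), (2, 'Globex');
        INSERT INTO orders VALUES (10, 1), (11, 2), (12, 99), (13, NULL);
    """)
    return conn


def static_check(conn: sqlite3.Connection) -> int:
    """Copy every violating order into the error table and return the violation count."""
    violations = conn.execute(VIOLATION_QUERY).fetchall()
    conn.executemany(
        "INSERT INTO err_orders VALUES (?, ?, ?)",
        [(order_id, cust_id, CONSTRAINT_NAME) for order_id, cust_id in violations],
    )
    conn.commit()
    return len(violations)


if __name__ == "__main__":
    conn = build_demo_db()
    print(static_check(conn))  # 2: order 12 (unknown customer) and order 13 (no customer)
```

The `LEFT JOIN ... WHERE c.id IS NULL` pattern catches both an order pointing at a customer that does not exist and an order with no customer at all.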

Flow

The information systems targeted by transformation and integration processes often implement their own declarative rules. The Flow Control function is used to verify an application's incoming data against these constraints before loading the data into these targets.
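To make the flow-control idea concrete, here is a plain-Python sketch, assuming a hypothetical order-line flow and hypothetical target rules: a non-null, unique key; a mandatory product reference; and a positive quantity. Each incoming row is checked before it is loaded; valid rows go on to the target, and violating rows are set aside with the names of the rules they broke. The rule names are invented for this example and are not what Oracle Data Integrator produces.

```python
from dataclasses import dataclass
from typing import List, Optional, Set, Tuple


@dataclass(frozen=True)
class OrderLine:
    line_id: Optional[int]     # target primary key
    product_id: Optional[int]  # reference to a product already in the target
    quantity: int


def flow_control(
    incoming: List[OrderLine],
    known_products: Set[int],
    target_keys: Set[int],
) -> Tuple[List[OrderLine], List[Tuple[OrderLine, List[str]]]]:
    """Split an incoming flow into rows safe to load and rows that break a target rule."""
    valid: List[OrderLine] = []
    rejected: List[Tuple[OrderLine, List[str]]] = []
    seen = set(target_keys)  # keys already in the target plus keys accepted from this batch
    for row in incoming:
        errors: List[str] = []
        if row.line_id is None:
            errors.append("PK_NOT_NULL")
        elif row.line_id in seen:
            errors.append("PK_UNIQUE")
        # A missing product counts as a violation here (NOT NULL plus reference check).
        if row.product_id not in known_products:
            errors.append("FK_PRODUCT")
        if row.quantity <= 0:
            errors.append("CK_QUANTITY_POSITIVE")
        if errors:
            rejected.append((row, errors))  # isolated for recycling or reporting
        else:
            valid.append(row)               # would be loaded into the target
            seen.add(row.line_id)
    return valid, rejected


if __name__ == "__main__":
    incoming = [
        OrderLine(1, 100, 5),
        OrderLine(2, None, 1),  # order line with no product
        OrderLine(1, 100, 2),   # duplicate key
        OrderLine(3, 999, 0),   # unknown product and zero quantity
    ]
    valid, rejected = flow_control(incoming, known_products={100, 200}, target_keys=set())
    print(len(valid), [errors for _, errors in rejected])
    # 1 [['FK_PRODUCT'], ['PK_UNIQUE'], ['FK_PRODUCT', 'CK_QUANTITY_POSITIVE']]
```

Checking uniqueness against both the target's existing keys and the keys accepted earlier in the same batch mirrors what a primary key on the target would enforce at load time, so the load itself should not fail on rows that passed.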

Benefits

The main advantages of performing data integrity checks are as follows:

- Increased productivity over the target database’s entire life cycle. Business rule violations in the data slow down application programming for as long as the target database is in use. Cleaning the transferred data can therefore reduce application programming time.

- Validation of the target database’s model. The rule violations detected do not always imply insufficient source data integrity. They may reveal a degree of incompleteness in the target model. Migrating the data before an application is rewritten makes it possible to validate a new data model while providing a test database in line with reality.

- Improved quality of service for end users. End users benefit from using data that has been pre-treated to filter out business rule violations.

Ensuring data integrity is not always a simple task. It requires that any data violating declarative rules be isolated and recycled. This usually means writing complex programs, in particular when the target database incorporates its own mechanism for verifying integrity constraints. From an operational standpoint, the most efficient approach is to implement a method for correcting erroneous data (on the source, target or recycled flows) and then reuse this method throughout the enterprise.
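The isolate-and-recycle loop can be sketched in plain Python under a few assumptions: a single hypothetical rule (every order references a customer already loaded in the target) and a hypothetical correction function, `cast_customer_id`, standing in for the shared, reusable correction method. Rows rejected by one run are corrected and re-checked on the next, so they are loaded once their reference data arrives or once the correction fixes them. This illustrates the idea only; it is not how Oracle Data Integrator implements recycling.

```python
from typing import Callable, Dict, Iterable, List, Set

Order = Dict[str, object]


def violations(order: Order, customers: Set[int]) -> List[str]:
    """Hypothetical rule: an order must reference a customer already in the target."""
    return [] if order.get("customer_id") in customers else ["FK_ORDERS_CUSTOMERS"]


def integration_run(
    incoming: Iterable[Order],
    recycled: List[Order],
    customers: Set[int],
    target: List[Order],
    correct: Callable[[Order], Order] = lambda order: order,
) -> List[Order]:
    """Load valid rows into `target`; return the rows to recycle on the next run.

    Rows rejected by an earlier run are first passed through the shared
    correction function, then re-checked alongside the new flow.
    """
    to_recycle: List[Order] = []
    for order in [correct(o) for o in recycled] + list(incoming):
        if violations(order, customers):
            to_recycle.append(order)  # isolated again, retried on the next run
        else:
            target.append(order)
    return to_recycle


def cast_customer_id(order: Order) -> Order:
    """Hypothetical reusable correction: turn a text customer code such as "1" into an int."""
    cid = order.get("customer_id")
    if isinstance(cid, str) and cid.isdigit():
        return {**order, "customer_id": int(cid)}
    return order


if __name__ == "__main__":
    target: List[Order] = []
    # Run 1: customer 2 is not loaded yet, and order 12 carries a text customer code.
    pending = integration_run(
        [{"id": 10, "customer_id": 1}, {"id": 11, "customer_id": 2}, {"id": 12, "customer_id": "1"}],
        recycled=[], customers={1}, target=target,
    )
    # Run 2: customer 2 has arrived; recycled rows are corrected and re-checked.
    pending = integration_run([], pending, customers={1, 2}, target=target, correct=cast_customer_id)
    print([o["id"] for o in target], pending)  # [10, 11, 12] []
```

Keeping the correction as a separate function passed into each run is what allows the same method to be reused across flows, as the paragraph above recommends.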